Responsible AI 101 [+5 Critical Questions to Ask Vendors]

Our planet has gone through five major extinction events that dramatically changed the course of life on Earth. With each event, we saw the very essence of life either push forward and become better—or vanish entirely. Similarly, the technological landscape is experiencing a dramatic shift right now with artificial intelligence (AI).

At Glassbox, we've seen it time and again: companies that refuse to adopt and adapt to new technology fall behind and eventually go out of business. As AI continues to advance at an unprecedented pace, it’s time to embrace innovation and make it the cornerstone of your customer experience (CX) strategy.

However, it’s not just about integrating AI into every business unit. It’s about doing so responsibly while considering all of its potential risks and challenges.

In this guide, we’ll explore the concept of responsible AI and provide guidance on aspects like:

The importance of responsible AI for enterprises in 2024

What the integration of responsible AI looks like for CX teams

Questions to ask your next AI-native vendor to assess their product

What is responsible AI?

AI has the potential to bring many benefits to both businesses and society at large, but without the right guardrails, it can also do more harm than good.

Responsible AI is a framework that recognizes this reality and ensures that companies implement and deploy AI ethically and legally. The goal is for vendors to design AI systems that respect individual rights, promote social good and mitigate potential negative effects.

For example, in 2016, Microsoft released Tay, a conversational chatbot for Twitter. Its debut was short-lived—the company had to take it down within 24 hours. The reason? Tay learned from its interactions with users and started producing outputs that consisted of racist, misogynistic and politically incorrect remarks.

The chatbot wasn’t developed with responsible AI principles in mind, so it lacked sufficient guidelines on what data to ingest and process and what data to ignore during training.

Similarly, when it comes to CX, you have to think about the platforms you’re using—especially those powered by AI as they tend to process large amounts of confidential customer data. If these AI systems aren’t developed and used responsibly, they can lead to biased decision-making, privacy violations or even discriminatory treatment of customers.

Here are the guiding principles of responsible AI (courtesy of Microsoft) that you should consider:

Fairness and non-discrimination: Treat all customers and customer interactions equally, without bias. This means AI-native companies have to train systems using data that’s diverse and representative, while regularly testing the output for instances of bias and discrimination.

Accuracy and reliability: The AI systems that your vendors offer should be reliable and accurate in their output. AI hallucinations are common, so the systems you use should be tested rigorously to ensure you can trust the output at any given time.

Transparency and explainability: Be transparent about how you’re using AI and how the AI systems work. For example, do stakeholders and customers understand how you collect, use and protect their data? Also, explain how your AI systems make decisions and generate insights. This information may affect how customers use the model.

Privacy and security: Your organization likely handles sensitive customer and company data. Choose AI systems that offer secure data storage, encryption and access controls, and that comply with relevant data protection regulations.

Human oversight and accountability: While AI can automate certain tasks and processes, you still have to maintain oversight. This means regularly monitoring and auditing AI systems for accuracy, fairness and compliance.

Inclusive collaboration: Involve stakeholders with diverse backgrounds and experiences to strategize, train and test these systems. This approach allows companies to create a product that addresses the needs of many, expanding the use cases of the product.

What can responsible AI do for enterprises?

Given the scale of enterprise operations, teams can face several challenges when adopting AI-enabled or AI-native tools. Some of the key issues include:

Limited understanding of how the AI models work, since the explanation offered by vendors (or lack thereof) makes these models difficult to interpret

A lack of compliance with regulatory standards such as the EU AI Act and the Health Insurance Portability and Accountability Act (HIPAA)

A potential vulnerability to threats such as data poisoning, model inversion and cybersecurity attacks

Data security around model training, as some AI models use the data you input to continually train their systems—risking potential leakage of confidential data

When you consider that 73% of consumers expect more interactions with AI and are ready to use it in their daily lives, there’s no escaping these challenges. This is why it’s time to get serious about responsible AI usage. Here’s how it can help your organization:

Integrate compliance within your systems

Compliance is key. With the rise of regulations such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States, you need to choose platforms that work on the principles of governance, risk and compliance (GRC).

These regulations require you to handle personal data in a way that enables data security, provisions for user consent and transparency in data usage. If you use a product that integrates these aspects within its development process, you can easily meet these legal requirements.

For example, to help you comply with regulations such as GDPR and CCPA, Glassbox gives you the option to block session recordings for specific IPs from the EU or California. This way, you're not collecting data from regions where you’re not supposed to, which prevents that data from being used for any other purpose.
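To make the idea of region-based blocking concrete, here is a minimal sketch of how a recording decision could be gated on the client's IP address. It is an illustration only, not Glassbox's implementation: the function name `should_record_session` and the `BLOCKED_NETWORKS` list are hypothetical, and the CIDR ranges are reserved documentation networks rather than real EU or California allocations.

```python
import ipaddress

# Hypothetical CIDR ranges standing in for regions where recording is disabled.
# These are reserved documentation networks (RFC 5737), not real regional allocations.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def should_record_session(client_ip: str) -> bool:
    """Return False when the client IP falls inside any blocked network."""
    address = ipaddress.ip_address(client_ip)
    return not any(address in network for network in BLOCKED_NETWORKS)
```

In a real deployment, the blocked ranges would likely come from a maintained geolocation source, and the check would run before any session data is captured.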

Avoid unintended consequences of AI

A Conversica report found that 77% of executives are worried about AI products generating false information. In addition, they’re also apprehensive about the accuracy of current-day data models and the lack of transparency in building them.

These are legitimate concerns as the consequences are multifold. Since AI lets you automate and operate tasks at scale, any issues with the products you use will also cause problems at scale. In the end, these errors cost you money, time and effort—while resulting in an erosion of trust.

For instance, if an AI model is trained on incomplete or biased data, it could affect your team’s decision-making capabilities and lead to biased or negative outcomes. Responsible AI practices alleviate these issues from the get-go, making the model fair and accessible.

Mitigate risks associated with AI usage

One of the biggest risks with AI usage is the potential for malicious actors to exploit system vulnerabilities. This can include attacks designed to manipulate or deceive AI algorithms, steal sensitive data or disrupt business operations.

A HiddenLayer report found that 91% of IT leaders consider AI models crucial to business success. However, the same report also concluded that 77% of companies have already faced a data breach due to AI usage.

Also, financial services (FSI) companies tend to have concerns about nascent AI regulations, ethical implications and the large investments required to integrate AI into their tech stack. Despite these risks, we don’t expect AI usage to decline, even though enterprises are wary and adopting these tools slowly.

The key is to choose a product that’s built using responsible AI practices. When you adopt products that take a proactive approach to AI governance, you embed risk management into every stage of the digital transformation journey.

Build a positive brand reputation

Consumers and business leaders are becoming increasingly aware of the larger implications of the products they use and veer toward brands that align with their values.

When you consider that 57% of consumers constantly fear being scammed, it makes sense to prioritize data privacy and security. This includes evaluating your current vendors to see how they’re integrating responsible AI within their products.

Taking this approach shows that you’re a company that prioritizes the well-being of its customers and society at large. It can lead to increased customer loyalty, as individuals are more likely to trust and engage with brands that they perceive as ethical and responsible.

Innovate faster (without breaking things)

As AI adoption increases across the board, there’s massive pressure on enterprises to innovate quickly and stay ahead of the curve. Under that pressure, it can be tempting to adopt the “move fast and break things” mentality without thinking through your options carefully.

The popularity of ChatGPT has led many companies to re-assess their AI policies and usage. A Gartner report found that 66% of enterprise risk executives see generative AI as an emerging risk because of intellectual property violations, data privacy and cybersecurity threats. The companies that prioritize responsible AI are the ones that’ll overcome this obstacle.

What does responsible AI look like in practice to achieve autonomous CX?

Any responsible AI organization will integrate the core principles in everything they do. At Glassbox, we aim to completely change the way brands connect and engage with their customers online. But that’s only possible if we use AI responsibly, especially in regulated industries like financial services (FSI).

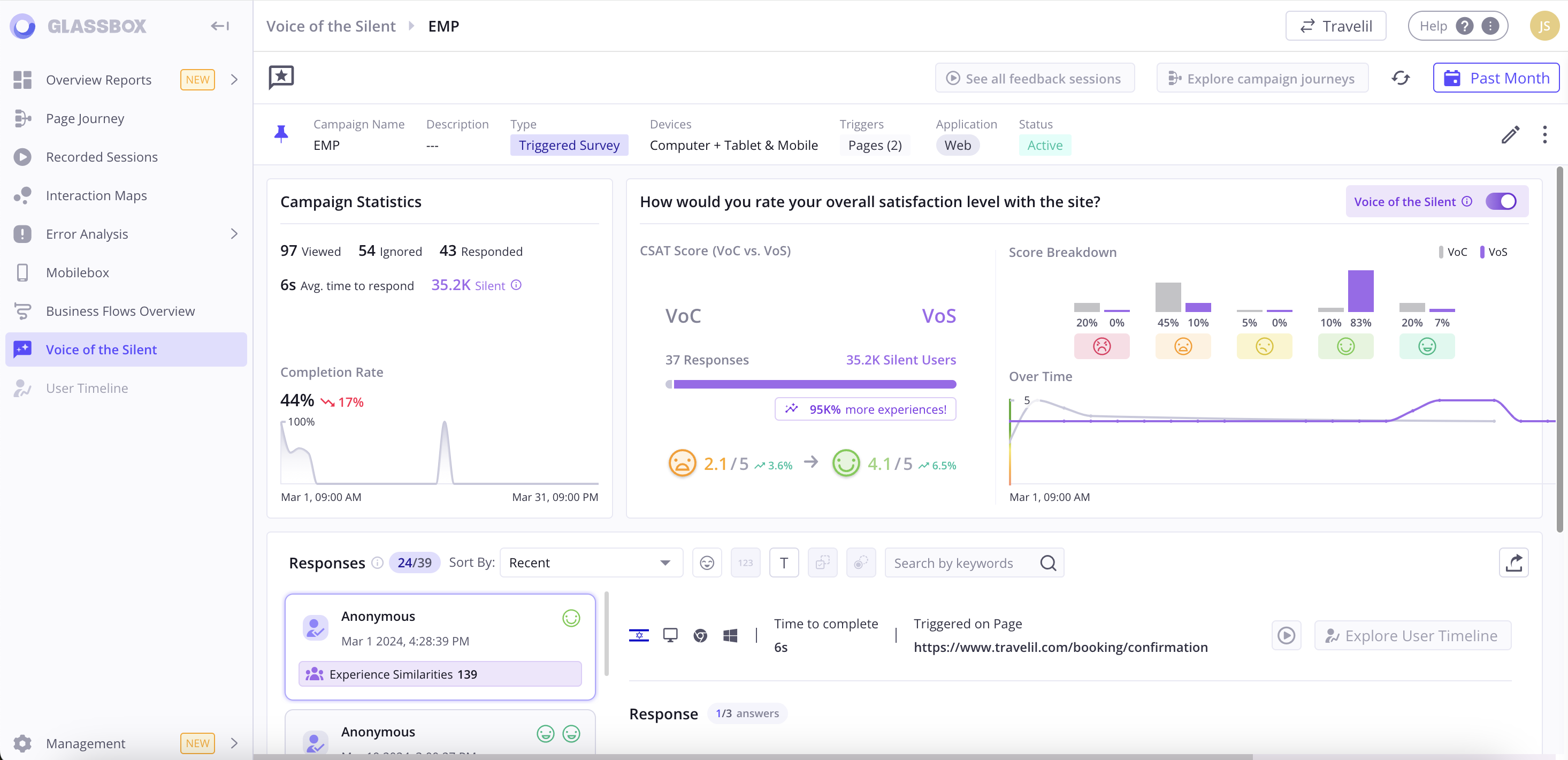

For instance, our internal analysis found that only 4% of your customers will ever provide direct feedback about their digital experience with your product/brand. That’s why we introduced the Voice of the Silent (VoS) feature where you can use our AI model to compare rated interactions from this smaller segment to similar sessions across your entire user base.

This approach lets you make more data-driven decisions based on actual interactions customers are having with your brand. It ensures fairness in your decision-making process and prevents any bias from creeping into these processes.
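One way to picture "comparing rated interactions to similar sessions" is a nearest-neighbor estimate: an unrated session borrows the average rating of the rated sessions it most resembles. The sketch below is a simplified illustration under that assumption and does not describe how VoS actually works. The function `estimate_rating`, the two session features and the sample data are all hypothetical.

```python
import math


def estimate_rating(unrated, rated_sessions, k=3):
    """Average the ratings of the k rated sessions closest to `unrated`.

    `unrated` is a feature vector; `rated_sessions` is a list of
    (feature_vector, rating) pairs. All feature names are hypothetical.
    """
    if not rated_sessions:
        raise ValueError("at least one rated session is required")
    # Sort rated sessions by Euclidean distance to the unrated session.
    by_distance = sorted(rated_sessions, key=lambda item: math.dist(unrated, item[0]))
    nearest = by_distance[:k]
    return sum(rating for _, rating in nearest) / len(nearest)


# Made-up features: (error_count, minutes_on_page); ratings on a 1-5 scale.
rated = [((0, 2), 5), ((1, 3), 4), ((5, 9), 1), ((6, 8), 2)]
smooth_session = estimate_rating((0, 3), rated, k=2)
rough_session = estimate_rating((6, 9), rated, k=2)
```

Here the session with no errors inherits a high estimated rating and the error-heavy session a low one, which is the intuition behind extending feedback from a small rated segment to the silent majority.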

Our goal in developing responsible AI practices around VoS and the rest of our product was to enable these models to extract some of the richest and most advanced customer insights available, while simultaneously safeguarding sensitive information. We do this with:

No data sharing policy: We only process data within customer prompts without drawing on third-party sources, ensuring that external apps cannot access or share your data.

Single-tenant private endpoint: We use a private OpenAI instance on Microsoft Azure that operates through a secure endpoint only. It helps us keep all your data secure and private at all times.

Opt out of model training: Instead of using customer data to train our models, we rely on up-to-date, pre-trained models. You can also opt out of data-sharing capabilities for personalized recommendations, giving you more control over your data and its usage.

Robust monitoring systems: We continuously monitor all interactions with our AI provider using advanced security systems. This allows us to look for potential leaks or vulnerabilities and mitigate them before they become an issue.

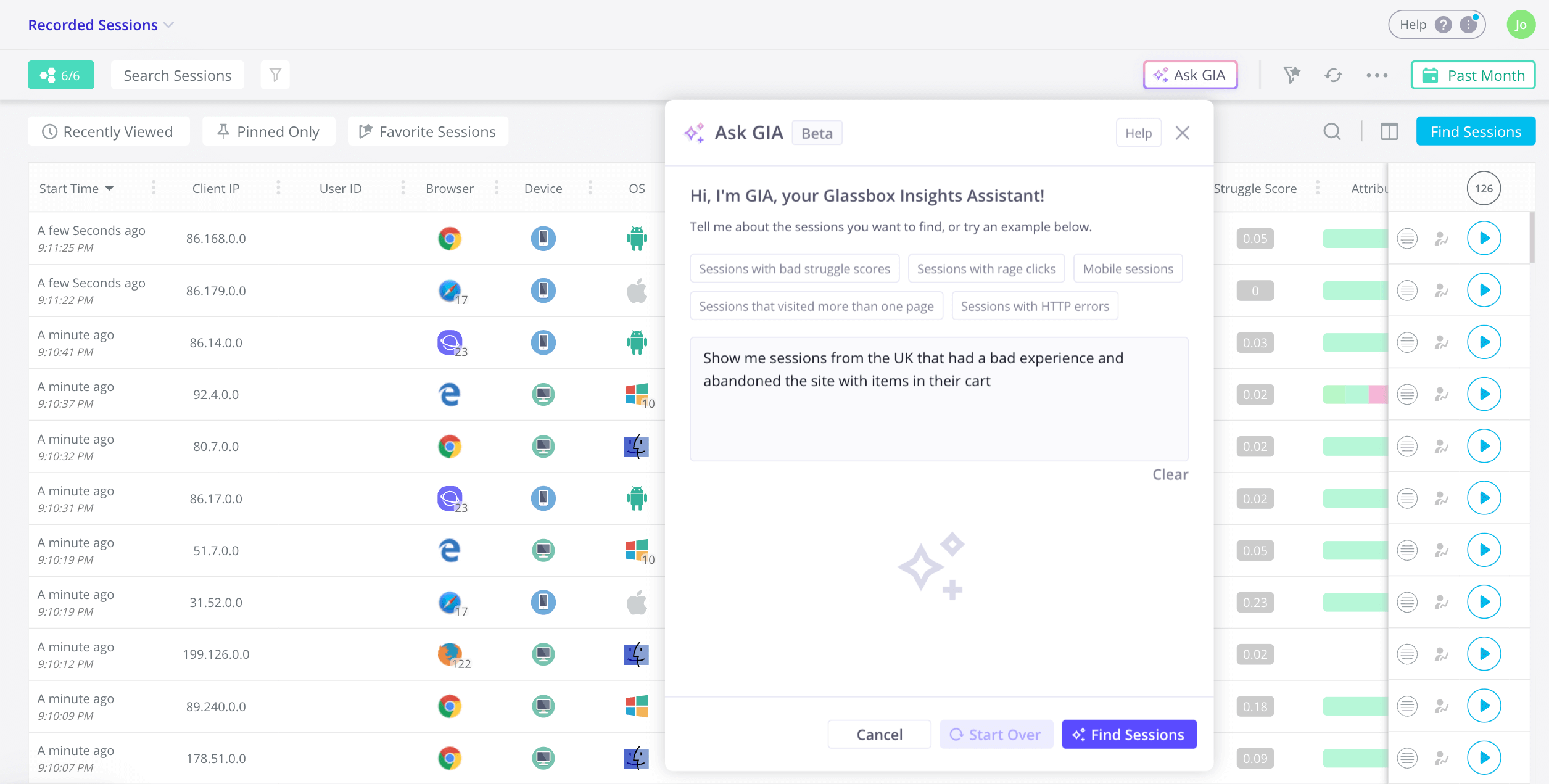

As for fairness and inclusive collaboration, we have features like the Glassbox Insights Assistant (GIA), which democratizes data and breaks down silos within the enterprise. Now, any business user can simply ask it a question and get top takeaways and recommendations for customer struggles or points of friction in seconds. This dramatically speeds up the analysis process, as the AI gives you insights you can act on immediately, all within a familiar chat-based interface.

The idea is to empower product, engineering and marketing teams with customer intelligence that lets them build better experiences without compromising on responsible AI principles.

5 questions you should ask when considering an AI-native vendor

To help you evaluate potential AI vendors or partners for your business, we’ve compiled a list of questions for you to ask:

1. Can you elaborate on your AI instance?

Why it's a good question to ask:

Understanding how the AI model has been trained and how it works is crucial. AI instances can be private or public. In both cases, AI models will be trained and fine-tuned using your corporate data. However, with private instances, only your organization can access the data and output. With a public AI instance, sensitive data like your customers’ Personally Identifiable Information (PII) is shared with the AI service provider, who can then use it to train future models accessible to third parties.

What a good answer looks like:

Responsible AI platforms always rely on a private AI instance to contain all customer prompt processing within a secure environment. The vendor should provide a clear explanation of the instance, including:

The type of AI model used

The training data used and its sources

The quality assessment process

2. How do you ensure the incorporation of responsible AI during development?

Why it's a good question to ask:

Responsible AI incorporates multiple principles, from fairness to privacy. Ideally, your vendor should speak to the integration of these principles during the development, quality assessment and deployment process. This will help you gauge if a vendor is serious about using AI ethically within their organization and by extension, with their customers.

What a good answer looks like:

Responsible AI organizations conduct regular bias testing and implement mitigation strategies to ensure that the model is trained on high-quality data. They should be transparent about how the AI model makes decisions and provide robust explanations for the final output it provides—for example, how it reads the data, processes it and determines what to recommend.
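If you want a feel for what a basic bias test might measure, the sketch below computes a disparate impact ratio: the lowest group's rate of positive outcomes divided by the highest group's rate. It is one simple fairness check among many, not a description of any particular vendor's process. The function names and the sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Compute the share of positive outcomes per group.

    `outcomes` is an iterable of (group, got_positive_outcome) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, positive in outcomes:
        totals[group] += 1
        if positive:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes):
    """Ratio of the lowest group selection rate to the highest (1.0 means parity)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome, so rates are equal
    return min(rates.values()) / highest


# Made-up sample: group "a" gets a positive outcome 3 of 4 times, group "b" 1 of 4.
sample = [("a", True), ("a", True), ("a", True), ("a", False),
          ("b", True), ("b", False), ("b", False), ("b", False)]
ratio = disparate_impact_ratio(sample)
```

A commonly cited rule of thumb treats ratios below 0.8 as a signal worth investigating, so a vendor running a check like this on the sample above would have reason to dig deeper.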

3. What kind of data do you process and who can access this data?

Why it's a good question to ask:

Data is the foundation of any AI system, so you need to know what data the vendor uses and who has access to it within their organization. It’ll help you assess whether it’s compliant with relevant regulations such as GDPR or CCPA and if it aligns with your enterprise’s policies and values.

What a good answer looks like:

Your vendor should provide an overview of the types of data collected (PII, behavioral, etc.) and how it’s acquired. They should also explain:

How they store data: Your vendor should explain how their AI models ingest the data you’ve provided and store it. For example, do they store it in a structured database like MySQL or PostgreSQL? If so, how do they encrypt the data and what encryption algorithms do they use?

Where they store it: Ask your vendor whether they store the data in a physical or virtual location (like the cloud). In either case, they should tell you who the storage provider is and how the storage policies comply with regulatory requirements. For example, “We store our data in Amazon Web Services (AWS) data centers located in the United States, specifically in the us-east-1 (Northern Virginia) and us-west-2 (Oregon) regions.”

How they protect it in transit and during storage: Your vendor should have a clear answer on whether the data is encrypted both at rest and in transit from one location to another. For instance, Glassbox encrypts every AI instance irrespective of the deployment model, allowing us to protect the integrity of your data at all times.

If any members of their organization have access, they should clarify how access controls work to prevent unauthorized use.
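As a reference point for that conversation, here is a minimal sketch of deny-by-default, role-based access control. It is an assumption-laden illustration rather than any vendor's actual design: the role names, data categories and the `can_access` function are hypothetical.

```python
# Hypothetical roles and the data categories each one may read.
ROLE_PERMISSIONS = {
    "support_agent": {"behavioral"},
    "data_protection_officer": {"behavioral", "pii"},
}


def can_access(role: str, data_category: str) -> bool:
    """Deny by default: unknown roles and unlisted categories get no access."""
    return data_category in ROLE_PERMISSIONS.get(role, set())
```

The point to listen for in a vendor's answer is the default: access should be granted explicitly per role, and anything not listed should be refused.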

4. What are the give-up rights for your software?

Why it's a good question to ask:

Give-up rights refer to situations in which you give up certain rights or control over AI systems, especially when it comes to decision-making processes. Asking about them lets you assess how much control you have and whether a vendor is flexible about restricting or expanding these capabilities.

What a good answer looks like:

Responsible AI companies have clear guardrails over how they process customer data. For instance, at Glassbox, we use a stateless architecture which eliminates data storage between sessions. Since our communication protocol with Azure AI is string-based, there’s no data transfer or storage—ensuring that the AI assistant provides recommendations based on pre-trained models.

5. How do you respond in the event of a data breach or system failure?

Why it's a good question to ask:

No matter how robust a vendor's security measures are, there is always a risk of data breaches or system failures. When you ask your vendor about their incident response mechanisms, you’ll get an idea of how prepared they are for such situations and whether they can contain a problem quickly.

What a good answer looks like:

Your vendor should provide information on their incident response plan, including their procedures for detecting issues and isolating the root causes. In addition, they should shed light on the communication protocol for customers and remediation measures to resolve issues quickly.

Leverage responsible AI principles to turn customer friction into delight

Responsible AI is no longer a mere buzzword or a nice-to-have feature. It’s a critical component of successful AI adoption in enterprises, particularly in data-sensitive sectors like FSI.

Conversica’s report indicates that only 28.4% of business leaders are very familiar with their vendors’ responsible AI parameters. So, it’s time to critically evaluate how you’re integrating AI into your business, especially when it comes to handling sensitive customer data and insights.

In the future, the companies that succeed will be those that embrace responsible AI as a core value and a key driver of their business strategy. When you ask the right questions and implement the right practices, you’ll be able to see better, learn sooner and act faster using AI-driven insights.